Key Takeaways

- Batch inference with serverless GPUs optimizes large-scale data processing by increasing throughput, reducing computation time, and removing most of the burden of managing infrastructure.

- Serverless GPUs offer scalable, pay-per-use access to GPU compute for GPU-intensive tasks, letting businesses allocate resources on demand instead of making heavy hardware investments.

- Future developments in batch inference with serverless GPUs are likely to center on automation, AI-driven analytics, and improved scalability, changing how industries process data and make decisions.

Introduction to Batch Inference

In data science, batch inference is a method for processing large datasets efficiently. By generating predictions for groups (batches) of records at once rather than one record at a time, it increases throughput and can significantly reduce total computation time. Batch inference is important in many fields, including finance, healthcare, and marketing, where processing large data volumes quickly can directly affect outcomes and strategy. Following best practices for batch inference with serverless GPUs lets enterprises use cutting-edge computational power without managing complex infrastructure, which makes them more agile and quicker to adapt to market changes.
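The core idea can be illustrated with a minimal, framework-agnostic Python sketch: split the inputs into fixed-size batches, call the model once per batch, and reassemble the predictions in input order. `batched`, `run_batch_inference`, and `fake_model` are hypothetical names used only for illustration; in a real pipeline, `predict_batch` would wrap a PyTorch or TensorFlow model or a call to a serverless GPU endpoint.

```python
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batched(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def run_batch_inference(
    predict_batch: Callable[[List[T]], List[R]],
    items: Sequence[T],
    batch_size: int,
) -> List[R]:
    """Call predict_batch once per batch and concatenate results in input order."""
    results: List[R] = []
    for batch in batched(items, batch_size):
        outputs = predict_batch(batch)
        # Guard against a model that silently drops or duplicates rows.
        if len(outputs) != len(batch):
            raise RuntimeError("model returned a different number of outputs than inputs")
        results.extend(outputs)
    return results


# Hypothetical stand-in for a GPU-backed model: doubles each input.
def fake_model(batch: List[int]) -> List[int]:
    return [x * 2 for x in batch]


preds = run_batch_inference(fake_model, list(range(10)), batch_size=4)
print(preds)  # [0, 2, 4, 6, 8, 10, 12, 14, 16, 18]
```

Larger batches generally keep a GPU busier, but the best size depends on the model and on available GPU memory, so treat `batch_size` as a parameter to tune. On Python 3.12 and later, the standard library's `itertools.batched` provides similar chunking (yielding tuples instead of lists).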

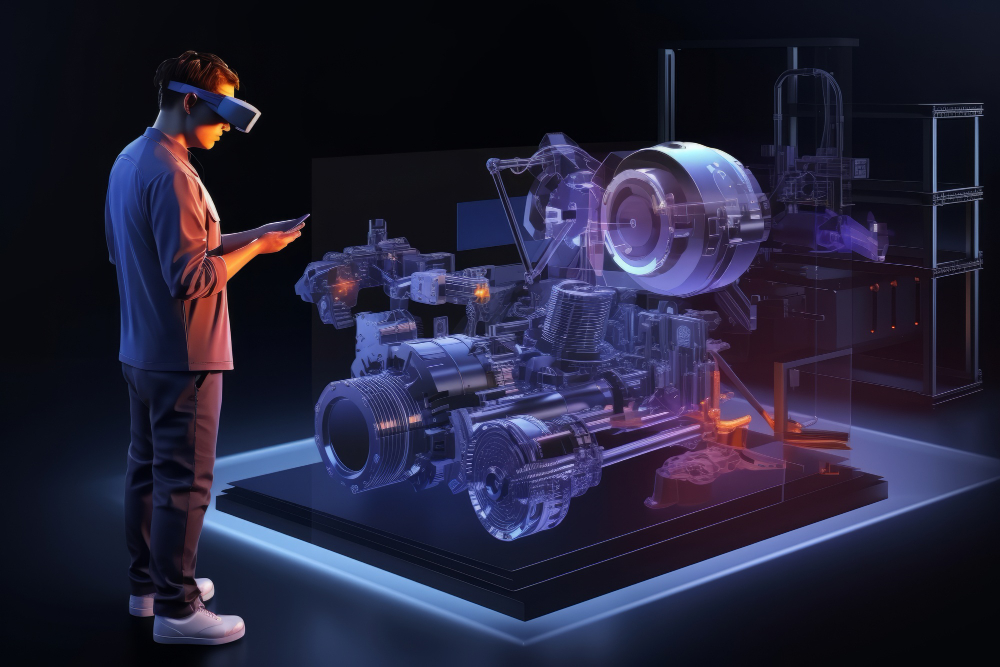

The Role of Serverless GPUs

Serverless GPUs mark a significant shift in the computing landscape, letting businesses run GPU-intensive workloads without the usual infrastructure overhead. They provide scalable, on-demand access to powerful hardware, which makes them well suited to tasks such as training deep neural networks and running batch inference over large datasets. Unlike conventional setups that require significant upfront investment in hardware, serverless GPUs are provisioned on demand and released when the work is done. This model not only reduces costs but also lets organizations scale operations smoothly, improving resource allocation and operational efficiency. As a result, teams can focus on model development and data insights and respond more quickly to evolving analytics needs.

Key Benefits of Using Serverless GPUs for Batch Inference

- Cost-Effectiveness: The main advantage is the pay-as-you-go pricing model, which ties spending directly to usage. Because you pay only while GPUs are running your jobs, you avoid paying for idle capacity, which makes computational projects more financially sustainable.

- Scalability: Batch processing demands can fluctuate significantly, and serverless GPUs can scale resources up and down to match them. This helps the system absorb peak loads while keeping performance consistent, although cold starts and provider concurrency limits can still add some delay.

- Flexibility: Business intelligence needs change quickly, so tools must adapt to new data inputs and machine learning models. Serverless GPUs support this by making it possible to deploy new models and insights rapidly and improve them continuously, without extensive changes to the existing setup.
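The cost and scalability points can be made concrete with a back-of-the-envelope estimate. The sketch below computes how many parallel GPU workers a job needs to finish before a deadline and compares a pay-per-second serverless bill with keeping the same number of GPUs running for a fixed period. All prices and throughput figures are hypothetical placeholders, not any provider's actual rates, and the model deliberately ignores cold starts, minimum billing increments, and data transfer costs.

```python
import math
from dataclasses import dataclass


@dataclass
class JobEstimate:
    workers: int            # parallel GPU workers needed to meet the deadline
    gpu_seconds: float      # total GPU time the job consumes
    serverless_cost: float  # billed only for GPU-seconds actually used
    always_on_cost: float   # same number of GPUs billed for the whole period


def estimate_job(
    num_items: int,
    items_per_gpu_second: float,
    deadline_seconds: float,
    serverless_price_per_gpu_second: float,
    always_on_price_per_gpu_hour: float,
    always_on_hours_billed: float,
) -> JobEstimate:
    if items_per_gpu_second <= 0 or deadline_seconds <= 0:
        raise ValueError("throughput and deadline must be positive")
    gpu_seconds = num_items / items_per_gpu_second
    # Scaling out: enough workers that the work finishes within the deadline.
    workers = max(1, math.ceil(gpu_seconds / deadline_seconds))
    # Pay-as-you-go: cost depends on total GPU time, not on how it is split across workers.
    serverless_cost = gpu_seconds * serverless_price_per_gpu_second
    always_on_cost = workers * always_on_hours_billed * always_on_price_per_gpu_hour
    return JobEstimate(workers, gpu_seconds, serverless_cost, always_on_cost)


# All figures below are hypothetical placeholders.
est = estimate_job(
    num_items=1_000_000,
    items_per_gpu_second=500,
    deadline_seconds=600,
    serverless_price_per_gpu_second=0.001,
    always_on_price_per_gpu_hour=2.50,
    always_on_hours_billed=24,
)
print(est.workers, f"{est.serverless_cost:.2f}", f"{est.always_on_cost:.2f}")  # 4 2.00 240.00
```

With these made-up numbers, the job needs 4 workers and costs about $2.00 on a pay-per-second basis, versus $240.00 for four GPUs kept on for 24 hours, even though the hypothetical per-hour serverless rate is higher. The gap shrinks as utilization of always-on machines rises, so the comparison is worth rerunning with your own measured throughput and your provider's published prices.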

Getting Started: Setting up Environment and Tools

The first step in batch inference with serverless GPUs is setting up the right environment, which has a large effect on how efficiently the whole process runs. Start by securing access to a cloud service that offers serverless GPUs; this is the foundation for everything that follows. Next, install the essential software: Python and machine learning libraries such as TensorFlow or PyTorch, which you will use to load models, run them on GPUs, and process their inputs and outputs. These tools are the building blocks of the batch inference process, so configuring them correctly is a critical step before any large-scale data operation.
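Before launching jobs, it helps to confirm that the environment actually contains the pieces described above. This minimal sketch, using only the Python standard library, reports the Python version, whether selected libraries are importable, and whether the `nvidia-smi` tool is on the PATH. The function name `check_environment` and the default library list are assumptions for illustration; on a serverless platform the check is only meaningful when run inside the provider's GPU worker, and finding `nvidia-smi` is a heuristic rather than proof that a GPU is usable.

```python
import importlib.util
import shutil
import sys
from typing import Dict, Sequence


def check_environment(
    libraries: Sequence[str] = ("torch", "tensorflow", "numpy"),
) -> Dict[str, object]:
    """Report the Python version, importable libraries, and nvidia-smi presence."""
    return {
        "python": sys.version.split()[0],
        # find_spec locates a top-level module without importing it; None means not installed.
        "libraries": {name: importlib.util.find_spec(name) is not None for name in libraries},
        # nvidia-smi ships with the NVIDIA driver; its presence suggests, but does not
        # guarantee, that a GPU is visible to this process.
        "nvidia_smi_on_path": shutil.which("nvidia-smi") is not None,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

Once PyTorch is installed, `torch.cuda.is_available()` gives a more direct, framework-level answer to whether a CUDA GPU can be used.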

Step-by-Step Guide to Batch Inference with Serverless GPUs

- Data Preparation: Before any processing begins, clean and organize the data so that it matches the format the model expects. This step is crucial because it directly affects the accuracy and quality of the inference results.

- Model Selection: Choosing the right model is essential to achieving the desired outcomes. You can use a pre-trained model as is if it fits the task, or fine-tune it on your domain-specific data to make its predictions more relevant and accurate.

- Environment Configuration: Tune your serverless setup by adjusting parameters such as batch size, GPU type, and concurrency limits to match your project's scope. This step balances cost against performance so that you get the most out of your computational resources.

- Executing Batch Inference: Deploy the chosen model to your serverless environment and start the batch inference job. This phase determines how effectively you use your computational resources and whether the results meet operational expectations.

- Result Evaluation: Analyzing the results afterward is vital to confirming that batch inference succeeded. Check accuracy, performance metrics, and anomalies, and use what you find to improve future model training and data processing strategies.
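The five steps can be tied together in a small end-to-end sketch. It cleans raw text records, applies a configurable batch size and concurrency cap, fans batches out to parallel workers, and scores the predictions against labels. Here `ThreadPoolExecutor` stands in locally for concurrent serverless GPU invocations, and `InferenceConfig`, `keyword_model`, and the sample data are hypothetical; a real deployment would replace `keyword_model` with a call to the deployed model.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence


@dataclass
class InferenceConfig:
    batch_size: int = 32      # items sent per worker invocation
    max_concurrency: int = 4  # cap on simultaneous workers (cost and quota guard)


def prepare(records: Sequence[Optional[str]]) -> List[str]:
    """Data preparation: drop empty records and normalize text."""
    return [r.strip().lower() for r in records if r is not None and r.strip()]


def execute(
    predict_batch: Callable[[List[str]], List[str]],
    items: List[str],
    config: InferenceConfig,
) -> List[str]:
    """Execution: fan batches out to workers; Executor.map keeps input order."""
    batches = [items[i:i + config.batch_size] for i in range(0, len(items), config.batch_size)]
    with ThreadPoolExecutor(max_workers=config.max_concurrency) as pool:
        per_batch = list(pool.map(predict_batch, batches))
    return [pred for batch_preds in per_batch for pred in batch_preds]


def evaluate(predictions: Sequence[str], labels: Sequence[str]) -> float:
    """Evaluation: plain accuracy; real evaluations would track more metrics."""
    if len(predictions) != len(labels):
        raise ValueError("predictions and labels differ in length")
    if not labels:
        return 0.0
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


# Hypothetical model: labels text "positive" if it contains the word "good".
def keyword_model(batch: List[str]) -> List[str]:
    return ["positive" if "good" in text else "negative" for text in batch]


raw = ["  Good product ", None, "bad service", "", "GOOD value", "not good at all"]
clean = prepare(raw)
preds = execute(keyword_model, clean, InferenceConfig(batch_size=2, max_concurrency=2))
labels = ["positive", "negative", "positive", "negative"]
print(evaluate(preds, labels))  # 0.75
```

The sample scores 0.75 because the keyword rule misclassifies "not good at all", which is exactly the kind of error the evaluation step is meant to surface. Capping `max_concurrency` mirrors the configuration step: it bounds how many workers run at once, trading speed for predictable cost.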

Challenges and Considerations

Despite these advantages, adopting batch inference with serverless GPUs involves several challenges. Data quality is paramount, because inaccurate input data leads to flawed outputs. Model updates must also be managed efficiently to avoid latency issues that could hurt performance. Security is another significant concern: sensitive information requires robust data protection practices. Addressing these issues means implementing data governance policies and using strong encryption and security protocols to maintain data integrity.
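One practical way to enforce data quality is a validation gate that runs before inference and sets aside bad records with a reason, rather than letting them reach the model. The sketch below assumes a hypothetical tabular schema (`id`, `age`, `income`) and illustrative range limits; the field names, bounds, and helper names are placeholders, not part of any specific framework.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, Iterable, List, Optional, Sequence, Tuple

Record = Dict[str, Any]


@dataclass
class ValidationReport:
    valid: List[Record] = field(default_factory=list)
    rejected: List[Tuple[Record, str]] = field(default_factory=list)  # (record, reason)


def first_problem(
    record: Record,
    required_fields: Sequence[str],
    numeric_ranges: Dict[str, Tuple[float, float]],
) -> Optional[str]:
    """Return a description of the first problem found, or None if the record is clean."""
    for name in required_fields:
        if record.get(name) is None:
            return f"missing field '{name}'"
    for name, (low, high) in numeric_ranges.items():
        value = record.get(name)
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            return f"field '{name}' is not numeric"
        # NaN fails this comparison too, so it is rejected as out of range.
        if not low <= value <= high:
            return f"field '{name}'={value} outside [{low}, {high}]"
    return None


def validate_records(
    records: Iterable[Record],
    required_fields: Sequence[str],
    numeric_ranges: Dict[str, Tuple[float, float]],
) -> ValidationReport:
    report = ValidationReport()
    for record in records:
        reason = first_problem(record, required_fields, numeric_ranges)
        if reason is None:
            report.valid.append(record)
        else:
            report.rejected.append((record, reason))
    return report


# Hypothetical input for a scoring model.
records = [
    {"id": 1, "age": 34, "income": 52000},
    {"id": 2, "age": None, "income": 40000},
    {"id": 3, "age": 212, "income": 61000},
    {"id": 4, "age": 45, "income": "n/a"},
]
report = validate_records(
    records,
    required_fields=("id", "age", "income"),
    numeric_ranges={"age": (18, 120), "income": (0, 10_000_000)},
)
print([r["id"] for r in report.valid])  # [1]
for record, reason in report.rejected:
    print(record["id"], reason)
```

Keeping rejected records together with their reasons, instead of silently dropping them, makes it easier to trace data problems back to their source, which supports the data governance policies mentioned above.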

Future Trends in Batch Inference with Serverless GPUs

As technology evolves, combining batch inference with serverless GPUs is likely to drive further innovation. Current trends point toward more automation and AI-driven analytics that streamline data processing pipelines and improve scalability. The resulting gains in computational efficiency could reshape traditional data processing and make data-driven decision-making more intuitive and accessible. As industries adopt these innovations, businesses can expect better performance, richer insights, and a lasting competitive advantage.

Conclusion: The Impact of Serverless GPUs on Data Processing

Integrating serverless GPUs into batch inference has substantially changed data processing capabilities, offering considerable flexibility and efficiency. By understanding and using these technologies, enterprises gain better predictive capabilities as well as the ability to innovate and adapt quickly in a changing digital landscape. As organizations build more of their operations around batch inference, the close relationship between serverless computing and data analytics is making it a cornerstone of modern data strategy.